1. Not all branded products are supported

2. Not all common food items are supported

3. Limited knowledge of the common names used for foods in other languages and cultures

4. Incomplete UPC codes and nutrition information

We recently started working on these problems and have solved most of them. We have also added image detection of food items, with more than 80% accuracy. See the demo below:

The most popular items supported by image detection:

Apple, orange, pear, pomegranate, grapes, grapefruit, cranberries, cherries, raspberries, strawberries, watermelon, beets, bell peppers, red cabbage, cabbage, radishes, radicchio, red onions, onions, potatoes, tomatoes, apricots, cantaloupe, mango, nectarines, peaches, papaya, tangerines, squash, carrots, pumpkin, sweet potatoes, figs, lemons, kiwi, pineapple, corn, avocado, limes, broccoli, asparagus, Brussels sprouts, green beans, kale, cucumber, celery, honeydew melon, banana, dates, garlic, ginger, mushrooms, blackberries, blueberries, plums, eggplant

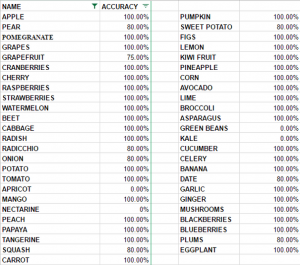

Here is the accuracy chart from our recent test, which used between 5 and 8 different images of each food item, all taken from Google Images:
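For reference, per-item accuracy in a test like this is the share of an item's test images that the detector labels correctly. The Python sketch below computes it from (true label, predicted label) pairs and flags items below an 80% threshold. The function names and input format are hypothetical, not part of our system, and the sample labels are made up for illustration.

```python
from collections import defaultdict


def per_item_accuracy(results):
    """Return {label: accuracy} from (true_label, predicted_label) pairs, one pair per test image."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for true_label, predicted_label in results:
        total[true_label] += 1
        if predicted_label == true_label:
            correct[true_label] += 1
    return {label: correct[label] / total[label] for label in total}


def pooled_accuracy(results):
    """Share of all test images labeled correctly, regardless of item."""
    results = list(results)
    if not results:
        return 0.0
    return sum(1 for t, p in results if t == p) / len(results)


def items_below(per_item, threshold=0.8):
    """Items whose accuracy falls strictly below the threshold (default 80%)."""
    return sorted(label for label, acc in per_item.items() if acc < threshold)


# Toy labels for illustration only; these are NOT results from our test.
toy_results = (
    [("apple", "apple")] * 4 + [("apple", "pear")]
    + [("kiwi", "kiwi")] * 3 + [("kiwi", "avocado")] * 2
)
per_item = per_item_accuracy(toy_results)
print(per_item)                      # {'apple': 0.8, 'kiwi': 0.6}
print(items_below(per_item))         # ['kiwi']
print(pooled_accuracy(toy_results))  # 0.7
```

Because each item was tested with a different number of images (5 to 8), the pooled accuracy over all images and the plain average of per-item accuracies can differ: the pooled figure weights items with more test images more heavily, while the average treats every item equally.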

The system supports these types of searches:

– Branded food items

– Common food items

– Food logs / diary-type entries (ideal for a chatbot-like interface)

– Food names in their local language (for increased accuracy)
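A diary-style entry has to be split into individual foods and quantities before each one can be looked up. The sketch below is a deliberately naive, rule-based splitter written for illustration; `parse_food_log` and its number-word table are hypothetical and do not describe how our system actually parses entries.

```python
import re

# Hypothetical lookup table for spelled-out quantities; extend as needed.
NUMBER_WORDS = {"a": 1, "an": 1, "one": 1, "two": 2, "three": 3, "half": 0.5}

# Separators that usually divide foods in a diary entry.
SEPARATORS = re.compile(r",|\band\b|\bwith\b|\+")
# A leading quantity: a number like "2" or "1.5", or a single word.
LEADING = re.compile(r"^(\d+(?:\.\d+)?|[a-z]+)\s+(.*)$")


def parse_food_log(entry):
    """Split a diary-style entry into (quantity, food) pairs (naive, rule-based)."""
    items = []
    for part in SEPARATORS.split(entry.lower()):
        part = part.strip(" .")
        if not part:
            continue
        quantity, food = 1.0, part  # default: one serving of the whole phrase
        match = LEADING.match(part)
        if match:
            first, rest = match.groups()
            if first[0].isdigit():
                quantity, food = float(first), rest
            elif first in NUMBER_WORDS:
                quantity, food = float(NUMBER_WORDS[first]), rest
        items.append((quantity, food))
    return items


print(parse_food_log("2 eggs, a banana and 1.5 cups of oatmeal"))
# [(2.0, 'eggs'), (1.0, 'banana'), (1.5, 'cups of oatmeal')]
```

This rule-based approach breaks quickly: it splits dish names such as "mac and cheese" on "and", and it leaves units ("cups of") attached to the food name. A production parser would need a food-name dictionary or a trained model to handle those cases.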